Research

'Um… so, today we'll, um…, learn about': Neural Tracking of Real-Life Spoken Language

Whether at school, university, or work, we listen to lectures by other people every day. Because of the pandemic, schools have again relied more heavily on frontal teaching in recent weeks and months, since hygiene regulations often make group work and other alternative teaching formats impossible. To benefit from this kind of knowledge transfer, children and adults have to focus their attention on a single speaker over extended periods of time. How well this works depends on various factors. If the topic is exciting, we can still follow a boring presentation. Frequent interruptions of the flow of speech by 'uhms' make it difficult to follow a lecture. And if background noise keeps us from understanding the speaker, we lose interest even in our favorite topic. How well people cope with these various disturbances differs from person to person.

In this German-Israeli cooperation project, we examine listeners' attention in realistic lecture situations. Methodological and technical developments in recent years make it possible to relate brain activity directly to natural speech. This can be used to examine, for example, how we process natural language or whether we are paying attention to a speaker. With the advancement of mobile electroencephalography (EEG), studying neurophysiological processes in everyday life is coming within reach. We would like to develop these methods further by gaining a better understanding of the many factors that differ between the laboratory and the classroom. In particular, we want to know how the peculiarities of a speaker and the background noise affect the listener. To answer these questions, we use EEG to measure brain activity while participants listen to lectures.

In the first project phase, we characterize video recordings of lectures in Hebrew and German and create a detailed description of the language material. In contrast to the language we know from television or radio, language in everyday situations is much less polished. For example, a lecturer hesitates, repeats parts of a sentence, leaves a sentence unfinished, or uses filler words such as ‘uhm’. In the second phase of the project, we use EEG to investigate how the speaker-specific factors identified in phase 1 affect listeners and, in particular, their attention. In the third phase, we turn to the influence of additional external noise on the listener, such as the hum of the air conditioning or the noise made by other children. In this way, we create the methodological foundations needed to examine attention directly in the classroom or lecture hall.

Sound perception in everyday life

We are constantly surrounded by sounds. Some of these sounds are important to us, others are not. Some sounds are pleasant (such as music or the voice of a loved one), while others can drive us crazy (such as the neighbor's lawn mower or a dripping tap). At the same time, we do not consciously hear every sound around us; many of them we simply ignore. How much of the surrounding sound we perceive varies from person to person. While some people find that even the slightest noise disrupts their concentration, others do not seem to notice the noise around them at all.

We want to understand how many of the sounds that surround us are actually perceived and how people differ in their perception.

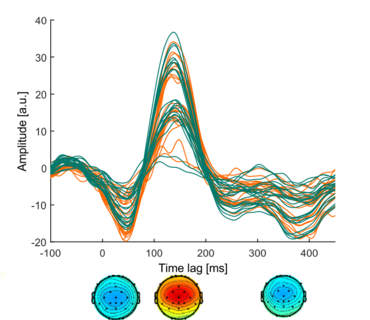

To investigate this, we use brainwave measurements (EEG). This allows us to examine perceptual processes without asking the person directly, which would itself change their attention: as soon as I ask you whether you notice the jackhammer in front of the house, you will notice it.

Ear-EEG to measure brain activity

Our goal is to measure brain activity in everyday life. For this, we use special electrodes, developed in-house, that are attached around the ear. With these electrodes, we can record EEG unobtrusively over long periods of time. Unfortunately, they cannot capture the activity of the entire brain, only part of it. In our research, we would like to better understand which brain processes we can measure with these electrodes and which remain hidden from us. Arnd Meiser is working on this question.

Auditory work strain in the operating room

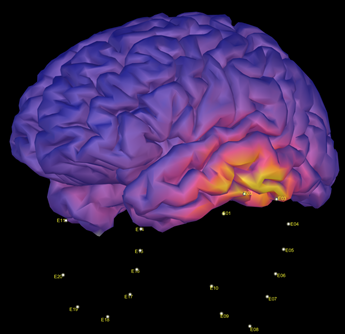

Surgery is a medical discipline in which complex motor tasks are performed under time pressure and in which mistakes can have serious consequences for patients. In addition, surgical staff are exposed to a stressful soundscape in the operating room (OR), characterized by a multitude of overlapping sounds such as the continuous humming of ventilators, monitor beeps, alarms, and conversations. We use electroencephalography (EEG) to record the brain's responses to the various aspects of this soundscape. In our studies, we have linked the neural activity measured during simulated surgical tasks to task performance and self-reports. This brings us closer to answering which sounds are perceived as disturbing and how they are processed.

Hardware Development - Beyond the lab EEG

Electroencephalography (EEG) enables the measurement of brain activity using electrodes placed on the scalp. In laboratory settings, specialized electrode caps ensure high signal quality. However, these caps are not well suited for EEG recordings in everyday life, where comfort, mobility, and ease of use are critical.

To address this challenge, we develop the hardware needed for studying auditory perception in real-world environments. This led to the development of nEEGlace, a neck-based EEG system that uses ear electrodes. Our first prototype integrated a mobile EEG amplifier into a neck loudspeaker, demonstrating the feasibility of this approach. This work was published in the Open Hardware Journal, and with it we successfully recorded EEG in everyday life.

We are continuously improving this concept and are now developing a new nEEGlace system from scratch. This next-generation version is designed to record EEG and audio simultaneously, allowing us to investigate how the brain processes complex auditory environments.