When a self-driving car behaves differently in a classic dilemma situation than test subjects would have, the discrepancy shows up in their brain activity. Researchers from the Department of Psychology at the University of Oldenburg have demonstrated this in a study. In the long term, the findings are intended to help improve people's acceptance of autonomous vehicles. The team from the Experimental Psychology lab led by Prof. Dr. Christoph Herrmann reported its results in the journal Scientific Reports.

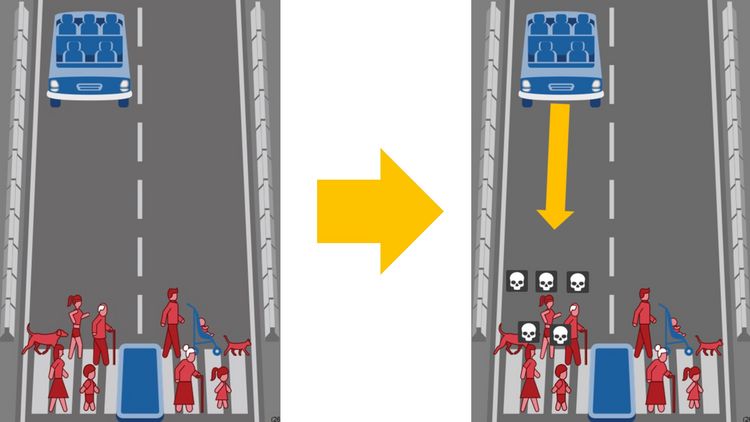

How should a self-driving car behave when a collision with either a child or a group of people is unavoidable? What if the driver's life could be saved, but only by running over a pet? Dilemmas like these are at the heart of the well-known Moral Machine test, which the Massachusetts Institute of Technology (USA) used several years ago to evaluate the answers of millions of people who took part online. The Oldenburg researchers have now repeated this experiment with two crucial adjustments. First, after the test subjects had made their choice, they were shown the decision supposedly made by the artificial intelligence controlling the car. Second, the researchers measured the participants' brainwaves during this process.

"The more complex a process is, the longer the processing time in the brain," explains Maren Bertheau, first author of the study and PhD candidate under Herrmann. While the mere perception of a visual stimulus becomes visible in brainwave measurements after only about 0.1 seconds, more complex cognitive processes, such as recognizing the image, cause detectable fluctuations after about 0.3 seconds. In the Oldenburg experiment, participants likewise showed a significant fluctuation after 0.3 seconds, which the researchers believe is the moment when the brain works out whether human and machine acted alike. According to the team's measurements, the excitation is up to two microvolts higher when the machine's action deviates from the participant's choice. This difference between congruent and non-congruent decisions persists for several milliseconds as the excitation flattens.

"We are looking for initial approaches that will eventually allow us to measure in everyday life whether people agree with the decision of a self-driving car," explains Herrmann. The aim is not to steer a vehicle by the power of thought, but to adapt the car's driving behavior as closely as possible to what each individual wants. In the near future, it might be conceivable to use mobile brainwave devices to measure whether the driver finds an overtaking maneuver too risky or considers a traffic light already too orange. "This would create a driving profile that increasingly matches the users' comfort, sense of security, and other demands," says Herrmann.

The researchers also emphasize the limitations of their current study. Their results are based on data averaged across 34 test subjects: they observed activity that was consistent across a large number of participants and that clearly points to the relationships described. To be usable in everyday life, however, the brain activity described would have to be measurable in every individual and at all times. Whether and how this can be achieved must be the subject of further investigation. The same applies to the question of whether assessments of traffic decisions that are less emotionally charged than dilemma situations can also be clearly read from brainwaves. The Oldenburg researchers have already taken up this question in a further experiment in which participants had to judge whether a gap was large enough for a left turn. The results of this study will be published soon.
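To illustrate why averaging across 34 participants matters, here is a minimal Python sketch that uses purely synthetic signals, not the study's data. It assumes a hypothetical deflection around 0.3 seconds that is two microvolts larger for non-congruent decisions, buried in much larger single-trial noise. The trial count, sampling rate, noise level, and peak amplitudes are all invented for illustration, and the study's actual analysis pipeline may look quite different.

```python
import numpy as np

# All values below are illustrative assumptions, not data from the study.
N_SUBJECTS = 34          # number of participants mentioned in the article
N_TRIALS = 100           # hypothetical trials per condition and participant
SAMPLING_RATE_HZ = 250   # hypothetical EEG sampling rate
NOISE_SD_UV = 10.0       # hypothetical single-trial noise in microvolts

# Time axis from 0 to 0.8 s after the machine's decision is shown.
times = np.arange(0, 0.8, 1 / SAMPLING_RATE_HZ)


def simulate_trials(rng, peak_uv):
    """Return synthetic trials (n_trials x n_samples) with a bump at 0.3 s."""
    bump = peak_uv * np.exp(-((times - 0.3) ** 2) / (2 * 0.05 ** 2))
    noise = rng.normal(0.0, NOISE_SD_UV, size=(N_TRIALS, times.size))
    return bump + noise


def grand_average_difference(seed=0):
    """Average over trials, then subjects; return non-congruent minus congruent."""
    rng = np.random.default_rng(seed)
    congruent, non_congruent = [], []
    for _ in range(N_SUBJECTS):
        # One averaged waveform per participant and condition.
        congruent.append(simulate_trials(rng, peak_uv=5.0).mean(axis=0))
        non_congruent.append(simulate_trials(rng, peak_uv=7.0).mean(axis=0))
    # Grand average across participants, then the difference wave.
    return np.mean(non_congruent, axis=0) - np.mean(congruent, axis=0)


difference = grand_average_difference()
peak_index = int(np.argmax(difference))
print(f"Largest difference: {difference[peak_index]:.2f} microvolts "
      f"at {times[peak_index]:.3f} s")
```

In this toy setup, a single trial is dominated by noise, and the two-microvolt difference only stands out once many trials and participants are averaged. That is the same reason the researchers caution that an effect seen in averaged data is not yet measurable in every individual at every moment.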

Please note: This text has been machine-translated.